Cost Efficient Scheduled Batch Tasks on Google Cloud Platform

The free tiers of services like AWS Lambda and Google App Engine are tempting alternatives to traditional cheap Virtual Private Servers (VPS). However, these services often restrict what kind of software can be run. For instance, Lambda functions are invoked through the Lambda API or via integrations such as Amazon API Gateway, rather than running as ordinary network servers. Additionally, both App Engine and Lambda often make only older runtime environments available. There may also be limits on how long code may take to execute.

It can help to consider whether the problem being solved requires machines to run constantly. If we can solve our problem with programs that only need to run periodically, then we can use these cost-efficient services without limiting our repertoire of technologies.

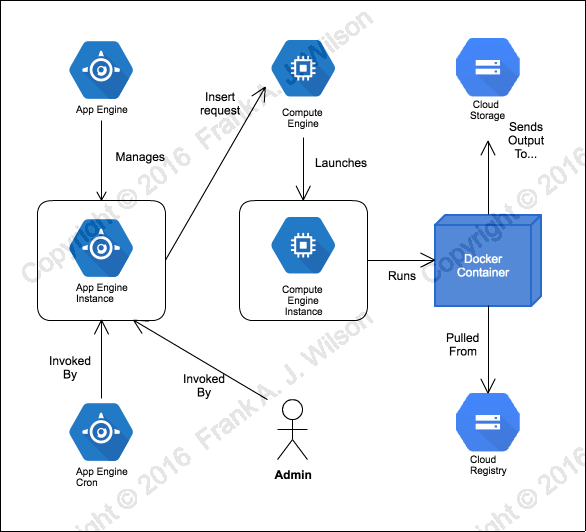

In this post we will discuss one example of using Google App Engine to launch tasks periodically on Google Compute Engine. We should note first that App Engine does offer backend instance types that can be configured to lift some of the constraints imposed on frontend instances. However, sometimes it is necessary to run software that was not designed for the App Engine environment, and this is our main motivation for this article.

It is also important to hide the details of the software we are running from the App Engine service that launches it. This keeps the App Engine service code reusable for launching all kinds of tasks. In this article we will show how Docker can be used to achieve this.

Our solution will have three main components:

- An App Engine web-service to launch tasks

- A Compute Engine instance to run the task

- A Docker Image to encapsulate the running task

# The App Engine Service

The App Engine service will launch a compute instance in response to a POST request. If a GET request is received, the service will respond with an HTML form that submits the POST request. As an exception, if the service receives a GET request from the App Engine cron service, we treat it as we would a POST request from any other source.

This is because the App Engine cron service only issues GET requests and uses them deliberately to trigger side effects, contrary to HTTP/1.1 (RFC 7231), which defines GET as a 'safe' method. This is unfortunate because, as a result of the RFC's assumptions, HTTP clients (user agents) are more likely to issue GET requests automatically (and to cache the responses). Unintentional, automatic launching of tasks is not desirable. It is especially concerning because safe methods do not typically receive CSRF (Cross Site Request Forgery) protection: if we allowed a browser to invoke our service via GET, a third-party site could trick an administrator into launching tasks. We cannot change how the cron service invokes tasks, but we can mitigate the risk by only allowing this behaviour when we can verify that the request came from the App Engine cron service.
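A minimal, framework-neutral sketch of that rule is shown below. The function name should_launch and its (method, headers) arguments are hypothetical rather than the article's handler; it assumes CSRF protection for the POST form is handled elsewhere, and it relies on the X-Appengine-Cron header that App Engine attaches to cron requests.

```python
def should_launch(method, headers):
    """Decide whether a request may launch a task (hypothetical helper).

    POST requests launch tasks; CSRF protection for the HTML form is assumed
    to be handled elsewhere. GET requests only launch tasks when they carry
    the 'X-Appengine-Cron: true' header set by App Engine's cron service.
    """
    method = method.upper()
    if method == "POST":
        return True
    if method == "GET":
        # HTTP header names are case-insensitive, so normalise before lookup.
        normalised = {name.lower(): value for name, value in headers.items()}
        return normalised.get("x-appengine-cron") == "true"
    return False
```

A plain browser GET therefore only ever receives the HTML form, while the cron service's GET is treated like a POST.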


App Engine sets the X-Appengine-Cron: true header on cron requests and strips it from requests that originate outside the application, so we can trust that it really does signify that cron is making the request.

We now turn to how the App Engine service creates the compute instance using the Google Compute Engine API.

# The Compute Instance

To create a basic Compute Engine instance, we need to specify the following:

- A name

- A machine type - a partial URL that combines the zone (e.g. us-central1-f) and the instance type (e.g. f1-micro), such as zones/us-central1-f/machineTypes/f1-micro

- The URL of a source image for the boot disk

- A network interface

In our case we will give our instances descriptive names that make collisions unlikely. Specifically, our scheme combines a human-readable slug such as 'test' or 'payroll', the current date and time, and a few random hex digits, e.g. test-06121840-0f70.
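One way to generate such names is sketched below. The helper make_instance_name is hypothetical, and we assume the eight digits in the example are day, month, hour and minute; the regular expression encodes Compute Engine's rule that instance names are lowercase letters, digits and hyphens, start with a letter, and are at most 63 characters.

```python
import datetime
import re
import secrets

# Compute Engine instance names: start with a letter, end with a letter or
# digit, only lowercase letters, digits and hyphens, at most 63 characters.
NAME_PATTERN = re.compile(r"^[a-z]([-a-z0-9]{0,61}[a-z0-9])?$")


def make_instance_name(slug, now=None, rand_hex=None):
    """Build a name like 'test-06121840-0f70' (hypothetical helper)."""
    if now is None:
        now = datetime.datetime.now(datetime.timezone.utc)
    # Assumed layout: day, month, hour, minute.
    stamp = now.strftime("%d%m%H%M")
    if rand_hex is None:
        rand_hex = secrets.token_hex(2)  # two random bytes -> four hex digits
    name = "{}-{}-{}".format(slug, stamp, rand_hex)
    if not NAME_PATTERN.match(name):
        raise ValueError("invalid instance name: " + name)
    return name
```

For example, make_instance_name("test", datetime.datetime(2016, 12, 6, 18, 40), "0f70") returns "test-06121840-0f70".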

We will also query the compute service 'images' endpoint to get the URL of a Debian Linux image.
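A small sketch of that lookup follows. It uses the Compute Engine v1 images.getFromFamily method, which returns the newest non-deprecated image in a family; the family name debian-12 is our assumption (any current Debian family in the public debian-cloud project would do), and compute is assumed to be a client built with googleapiclient.discovery.build("compute", "v1").

```python
def debian_image_url(compute, family="debian-12", project="debian-cloud"):
    """Return the selfLink URL of the newest image in a public image family.

    `compute` is assumed to be a Compute Engine v1 API client, e.g.
    googleapiclient.discovery.build("compute", "v1").
    The default family name is an assumption, not taken from the article.
    """
    image = compute.images().getFromFamily(project=project, family=family).execute()
    # selfLink is the full resource URL accepted as a boot disk sourceImage.
    return image["selfLink"]
```

Passing the client in as a parameter keeps the function easy to exercise without network access.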


The network interface we add will be a default external interface.
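As a sketch, such an interface can be described as below: an interface on the project's default network with a ONE_TO_ONE_NAT access config, which gives the instance an ephemeral external IP address. The helper name is hypothetical; the field names are those of the Compute Engine v1 instance resource.

```python
def default_external_interface(network="global/networks/default"):
    """Network interface on the default network with an ephemeral external IP."""
    return {
        "network": network,
        # ONE_TO_ONE_NAT assigns an external IP so the instance can reach the
        # internet, e.g. to install Docker and pull images.
        "accessConfigs": [{"type": "ONE_TO_ONE_NAT", "name": "External NAT"}],
    }
```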


Additionally, we will need to specify:

- Service account - grants the instance permissions automatically (e.g. to upload files to Google Cloud Storage)

- Startup script - to run actions as the instance starts

To keep things simple, we will configure the default service account with the broadest access scope, cloud-platform (full access to all Cloud APIs, i.e. do anything).
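The corresponding part of the request body might look like the sketch below. The value "default" selects the project's default Compute Engine service account; the helper name service_accounts is hypothetical.

```python
CLOUD_PLATFORM_SCOPE = "https://www.googleapis.com/auth/cloud-platform"


def service_accounts(scopes=(CLOUD_PLATFORM_SCOPE,)):
    """serviceAccounts entry for an instances.insert body (hypothetical helper)."""
    # "default" refers to the project's default Compute Engine service account.
    return [{"email": "default", "scopes": list(scopes)}]
```

Narrower scopes can be passed instead, for example https://www.googleapis.com/auth/devstorage.read_write for Cloud Storage, though an instance that deletes itself also needs access to the Compute Engine API.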


In production you would use finer-grained scopes appropriate to the task being run.

Our startup script will be rendered from a shell script template.
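A minimal sketch of such a template, rendered with Python's string.Template, is shown below; it is our own version, not the article's. It assumes a Debian image with curl and the Google Cloud CLI preinstalled, installs Docker with the get.docker.com convenience script, and looks up the instance's name and zone from the metadata server so that the instance can delete itself. Template placeholders use $docker_image and $container_args, so literal shell dollars are written as $$.

```python
import shlex
import string

# Hypothetical startup-script template; "$$" renders as a literal "$".
STARTUP_TEMPLATE = string.Template("""#!/bin/bash
# Install Docker with the upstream convenience script.
curl -fsSL https://get.docker.com | sh
# Let Docker use the instance's service account credentials for gcr.io.
gcloud auth configure-docker --quiet
docker pull $docker_image
docker run --rm $docker_image $container_args
# Ask the metadata server for this instance's name and zone, then delete it.
MD=http://metadata.google.internal/computeMetadata/v1/instance
NAME=$$(curl -fs -H "Metadata-Flavor: Google" $$MD/name)
ZONE=$$(curl -fs -H "Metadata-Flavor: Google" $$MD/zone)
gcloud compute instances delete "$$NAME" --zone "$${ZONE##*/}" --quiet
""")


def render_startup_script(docker_image, container_args):
    """Fill in the template, shell-quoting every substituted value."""
    return STARTUP_TEMPLATE.substitute(
        docker_image=shlex.quote(docker_image),
        container_args=" ".join(shlex.quote(arg) for arg in container_args),
    )
```

Quoting each argument with shlex.quote means a value such as "hello world" reaches the container as a single argument. The zone returned by the metadata server has the form projects/NUMBER/zones/ZONE, hence the ${ZONE##*/} expansion.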


The script installs Docker, pulls the Docker image at the URL given by the template parameter docker_image, and runs it with the arguments given by container_args. After the Docker image has run, the instance deletes itself.

All this information is represented as a nested dictionary that forms the compute 'insert' request body.
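Putting the pieces together, a sketch of that body and the call that submits it might look as follows. The helper names are hypothetical; the field names (machineType, disks, initializeParams, networkInterfaces, serviceAccounts, metadata with a startup-script item) are those of the Compute Engine v1 instances.insert API, and compute is again assumed to be a googleapiclient client.

```python
def build_instance_body(name, zone, machine_type, image_url, startup_script):
    """Assemble an instances.insert request body (hypothetical helper)."""
    return {
        "name": name,
        # Partial URL, e.g. zones/us-central1-f/machineTypes/f1-micro
        "machineType": "zones/{}/machineTypes/{}".format(zone, machine_type),
        "disks": [{
            "boot": True,
            # Delete the boot disk together with the instance.
            "autoDelete": True,
            "initializeParams": {"sourceImage": image_url},
        }],
        "networkInterfaces": [{
            "network": "global/networks/default",
            "accessConfigs": [{"type": "ONE_TO_ONE_NAT", "name": "External NAT"}],
        }],
        "serviceAccounts": [{
            "email": "default",
            "scopes": ["https://www.googleapis.com/auth/cloud-platform"],
        }],
        # Compute Engine runs the "startup-script" metadata value at boot.
        "metadata": {"items": [{"key": "startup-script", "value": startup_script}]},
    }


def launch_instance(compute, project, zone, body):
    """Submit the insert request and return the resulting zone operation.

    `compute` is assumed to be googleapiclient.discovery.build("compute", "v1").
    """
    return compute.instances().insert(project=project, zone=zone, body=body).execute()
```

Setting autoDelete matters here because the instance deletes itself: without it the boot disk would outlive the instance and keep accruing storage charges.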


# Tasks As Docker Images

We also need to devise a way to package a task as a Docker image. There are two parts to this. First we need to build the image, which requires a Dockerfile and the actual software to be deployed. In our example we will deploy a shell script that creates a file containing the current time and uploads the file to a path in Google Cloud Storage given as a parameter. We'll use Docker to preinstall any software the script calls (such as gsutil).
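The article's task is a shell script; as a stand-in (our substitution, not the original script), the same behaviour is sketched in Python below. It assumes gsutil is on the PATH inside the image, as the Dockerfile is meant to ensure, and takes the gs:// destination as its first command-line argument. The runner parameter exists only so the upload command can be replaced in testing.

```python
import datetime
import os
import subprocess
import sys
import tempfile


def run_task(destination, runner=subprocess.check_call):
    """Write the current UTC time to a file and copy it to a gs:// path."""
    if not destination.startswith("gs://"):
        raise ValueError("destination must be a gs:// URL")
    stamp = datetime.datetime.now(datetime.timezone.utc).isoformat()
    with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as f:
        f.write(stamp + "\n")
        path = f.name
    try:
        # gsutil is assumed to be preinstalled in the Docker image.
        runner(["gsutil", "cp", path, destination])
    finally:
        os.remove(path)


if __name__ == "__main__":
    run_task(sys.argv[1])
```

Inside the container this would run as, for example, python task.py gs://my-bucket/now.txt, with the path supplied through container_args.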


Once we've built the image we'll upload it to Google Container Registry. This service lets you push and pull Docker images to and from a Google Cloud Storage (GCS) bucket where your compute instances can easily reach them. The buckets are managed by the Container Registry service and generally have the form artifacts.{your-default-bucket-name}, which is usually artifacts.{project-id}.appspot.com. We orchestrate the image building and pushing process with GNU Make.


Concretely, after changing into the image/ sub-directory, we:

- Build the image as specified by the Dockerfile and tag it under the current user's name.

- Create a remote tag in Google Container Registry that corresponds to the current user's tag.

- Push the remote tag to the registry.
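The same three steps could also be scripted in Python, sketched below as a stand-in for the Makefile rather than a reproduction of it. The local tag {user}/{image_name} and the remote name gcr.io/{project}/{user}/{image_name} are our assumptions about the naming scheme, and pushing assumes Docker has already been authorised for gcr.io (for example with gcloud auth configure-docker). Docker repository names must be lowercase, so an unusual user name may need normalising.

```python
import getpass
import subprocess


def image_commands(project, image_name, user=None):
    """Docker commands to build, tag and push an image (hypothetical scheme)."""
    user = user or getpass.getuser()
    local_tag = "{}/{}".format(user, image_name)
    remote_tag = "gcr.io/{}/{}/{}".format(project, user, image_name)
    return [
        ["docker", "build", "-t", local_tag, "."],    # build from ./Dockerfile
        ["docker", "tag", local_tag, remote_tag],     # add the registry tag
        ["docker", "push", remote_tag],               # upload to the registry
    ]


def build_and_push(project, image_name, image_dir="image", user=None,
                   runner=subprocess.check_call):
    """Run each command from inside the image/ sub-directory."""
    for cmd in image_commands(project, image_name, user=user):
        runner(cmd, cwd=image_dir)
```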

# Conclusion

We have shown the building blocks of a generic service that launches small tasks on Google Compute Engine from Google App Engine. The completed example can be found on GitHub.

We have developed ideas described in the Google whitepaper 'Reliable task scheduling on Compute Engine' into a more concrete and lightweight solution.

The solution presented is minimal and could be developed in several areas. For instance, when automating instance creation it is also important to monitor how the system is being used, to control costs and avoid surprise bills!

In future articles we may cover interesting applications of these ideas to complement other Google services.

# Updates

December 20th 2016: Google have deprecated 'bring your own' container registry buckets. Accordingly, a reference to using a 'bucket of your choice' as an alternative to the default was removed from this article.